2) Auto Trimming Tutorial

Doubt sees the obstacles, faith sees the way; Doubt sees the dark of night, faith sees the day; Doubt dreads to take a step, faith soars on high. Doubt questions, "Who believes?" Faith answers, "I." To pursue my childhood dream with faith and guidance from God, knowing that the plans He has for me are to prosper me and not to harm me, plans to give me hope and a future. (Jeremiah 29:11)

Wednesday 28 May 2014

Testing & Tuning Stage

1) Field Test using Custom-made Quadcopter

Hexacopter mounted on the testing rack

Testing rack built for testing the hexacopter

Quadcopter built to simulate the hexacopter

Setting tuning parameters for the PID controller

2) Field Test Video of Hexacopter (Initial Stage)

Low Altitude Flight Test

3) Setting Waypoints in Mission Planner and Testing

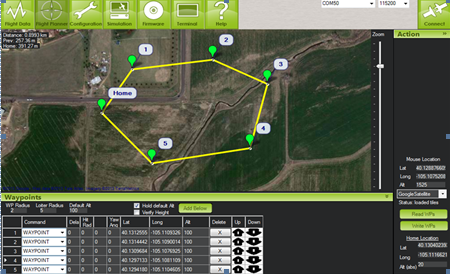

Setting Waypoint

Waypoint Test

4) PID Tuning

Image Processing

1) Payload Release Looping

This is the procedure for activating the payload and navigation system. The image processing chain, which runs from the surveillance sensor to the flight control system, is built around an object recognition algorithm.

The algorithm is divided into an image segmentation stage, which takes colour and texture information into account, followed by a model-based object detection stage, which uses shape, scale and context information. The image is then matched against a predefined target, and the result is used for data association and tracking together with data acquired from the GPS.

Lastly, an overriding command is sent to the flight controller to activate the release mechanism and override the flight mode.
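The looping procedure can be sketched as a simple per-frame loop. This is a minimal sketch, assuming each stage is available as a plain function; `release_loop`, the stage signatures and the 0.8 threshold are hypothetical and not taken from the project. The GPS data association and tracking step is left out for brevity.

```python
from typing import Any, Callable, Iterable, List, Optional


def release_loop(
    frames: Iterable[Any],
    segment: Callable[[Any], Any],
    detect: Callable[[Any], List[Any]],
    match: Callable[[List[Any]], float],
    send_override: Callable[[], None],
    threshold: float = 0.8,  # hypothetical match threshold
) -> Optional[int]:
    """Run segmentation -> detection -> matching on each frame and send the
    override command once the match score reaches `threshold`.

    Returns the index of the frame that triggered the release, or None.
    """
    for index, frame in enumerate(frames):
        labels = segment(frame)        # colour/texture classification
        candidates = detect(labels)    # shape/edge-based object detection
        if not candidates:
            continue                   # nothing target-like in this frame
        score = match(candidates)      # best match against the predefined target
        if score >= threshold:
            send_override()            # release payload and override flight mode
            return index
    return None
```

Passing the stages in as functions keeps the loop independent of the imaging library, so the same control flow can be exercised with stub stages before the real camera pipeline is connected.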

2) Image Segmentation

The image segmentation stage divides the original image into three classes based on colour and texture features. The images are captured in the Red Green Blue (RGB) colour space and then transformed into the Hue, Saturation and Value (HSV) colour space to reduce sensitivity to changes in light intensity.

After feature extraction, the colour and texture features are grouped into a single feature vector consisting of three colour channels and thirty texture channels. Each feature vector is then assigned a label representing its class. The aim is to segment the original image into three classes: object, shadow and background.

The classifier converts the original colour images into images with meaningful class labels.
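A minimal sketch of the segmentation idea follows, under several stated assumptions: the post does not name its classifier, so a nearest-centroid classifier is swapped in; a single 3x3 local standard deviation of V stands in for the thirty texture channels; and the `CENTROIDS` values are hypothetical placeholders that a real system would learn from labelled images.

```python
import colorsys
from typing import Dict, List, Sequence, Tuple

RGB = Tuple[int, int, int]
Feature = Tuple[float, float, float, float]  # (hue, saturation, value, texture)

# Hypothetical class centroids, all components scaled to 0..1.
CENTROIDS: Dict[str, Feature] = {
    "object": (0.0, 0.9, 0.8, 0.1),      # e.g. a saturated red target
    "shadow": (0.0, 0.1, 0.15, 0.05),    # dark and desaturated
    "background": (0.3, 0.4, 0.6, 0.3),  # e.g. greenish, textured ground
}


def to_hsv(img: Sequence[Sequence[RGB]]) -> List[List[Tuple[float, float, float]]]:
    """Convert 8-bit RGB pixels to HSV with every channel in 0..1."""
    return [[colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for (r, g, b) in row]
            for row in img]


def local_std(values: List[List[float]], y: int, x: int) -> float:
    """Std. deviation of a 3x3 neighbourhood, clipped at the image edge.
    Used here as a single stand-in texture channel."""
    h, w = len(values), len(values[0])
    window = [values[j][i]
              for j in range(max(0, y - 1), min(h, y + 2))
              for i in range(max(0, x - 1), min(w, x + 2))]
    mean = sum(window) / len(window)
    return (sum((v - mean) ** 2 for v in window) / len(window)) ** 0.5


def distance(f: Feature, c: Feature) -> float:
    """Euclidean distance, treating hue as circular (0 and 1 are both red)."""
    dh = abs(f[0] - c[0])
    dh = min(dh, 1.0 - dh)
    rest = sum((a - b) ** 2 for a, b in zip(f[1:], c[1:]))
    return (dh ** 2 + rest) ** 0.5


def segment(img: Sequence[Sequence[RGB]]) -> List[List[str]]:
    """Label every pixel as 'object', 'shadow' or 'background'."""
    hsv = to_hsv(img)
    v_channel = [[p[2] for p in row] for row in hsv]
    labels: List[List[str]] = []
    for y, row in enumerate(hsv):
        out = []
        for x, (h, s, v) in enumerate(row):
            feature = (h, s, v, local_std(v_channel, y, x))
            out.append(min(CENTROIDS, key=lambda name: distance(feature, CENTROIDS[name])))
        labels.append(out)
    return labels
```

Hue is compared circularly because HSV places red at both ends of the hue axis; a plain Euclidean distance would treat hue 0.0 and 0.99 as far apart.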

3) Object Model Detection

Object detection algorithms based purely on statistical information have their performance limited by the quality of the data. Here, a target object outline is used as prior knowledge for object detection: a simple round shape approximates the edge of the ground target, a technique known as edge matching. Any potential detections with the wrong shape and edges can then be rejected.
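The round-shape check can be illustrated on a binary mask of one candidate region. This is a minimal sketch, assuming a radial-deviation test as a stand-in for the project's edge matching: it measures how many boundary pixels lie close to the mean radius around the region's centroid. The names, the 15% tolerance and the 0.8 minimum score are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # 1 = pixel belongs to the candidate region


def boundary_pixels(mask: Mask) -> List[Tuple[int, int]]:
    """Region pixels with at least one 4-neighbour outside the region."""
    h, w = len(mask), len(mask[0])
    pts = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    pts.append((y, x))
                    break
    return pts


def round_edge_score(mask: Mask, tolerance: float = 0.15) -> float:
    """Fraction of boundary pixels within `tolerance` of the mean radius."""
    pts = boundary_pixels(mask)
    cells = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        return 0.0
    cy = sum(y for y, _ in cells) / len(cells)
    cx = sum(x for _, x in cells) / len(cells)
    dists = [math.hypot(y - cy, x - cx) for y, x in pts]
    r = sum(dists) / len(dists)
    if r == 0:
        return 0.0  # single-pixel region, no meaningful outline
    within = sum(1 for d in dists if abs(d - r) <= tolerance * r)
    return within / len(dists)


def is_round_target(mask: Mask, min_score: float = 0.8) -> bool:
    """Reject detections whose outline does not fit a circle."""
    return round_edge_score(mask) >= min_score
```

A filled disk scores close to 1.0, while an elongated rectangle scores low because its boundary pixels are spread over a wide range of distances from the centroid.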

4) Image Matching

The image matching technique applied here combines image segmentation based on colour values with an object model defined by shape and edge matching. This method is useful for detecting a predefined ground target. The higher the UAV flies, the more structure from the environment can be captured, and thus image registration is more reliable at higher altitude.

After both methods have been processed, a matching algorithm tries to identify the best match with the predefined image. The image with the highest matching percentage in terms of colour, shape and edges is checked against the criteria set for activating the release mechanism.
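The selection step can be sketched as a weighted score per candidate. This is a minimal sketch, assuming each candidate already carries colour, shape and edge agreement values in 0..1; the `Candidate` type, the weights and the 80% activation threshold are hypothetical choices, not values from the project.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence

# Hypothetical weights for combining the three matching criteria.
WEIGHTS: Dict[str, float] = {"colour": 0.4, "shape": 0.3, "edges": 0.3}


@dataclass
class Candidate:
    name: str
    colour: float  # 0..1 agreement with the predefined target's colour
    shape: float   # 0..1 agreement with the round-shape model
    edges: float   # 0..1 edge-matching score


def match_percentage(c: Candidate, weights: Dict[str, float] = WEIGHTS) -> float:
    """Weighted average of the three criteria, expressed as a percentage."""
    total = sum(weights.values())
    score = (weights["colour"] * c.colour
             + weights["shape"] * c.shape
             + weights["edges"] * c.edges) / total
    return 100.0 * score


def select_target(candidates: Sequence[Candidate],
                  min_percentage: float = 80.0) -> Optional[Candidate]:
    """Best candidate if it meets the activation criterion, else None."""
    if not candidates:
        return None
    best = max(candidates, key=match_percentage)
    return best if match_percentage(best) >= min_percentage else None
```

Returning `None` when even the best candidate falls short keeps the release mechanism inactive rather than firing on the least-bad match.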

Tuesday 27 May 2014

Hardware Design

1) Hardware Setup Diagram

For the ground control station, an EasyCAP capture device is connected to the laptop and the wireless camera receiver. For the air module, the CCD camera is installed at the bottom of the hexacopter airframe on a carbon-fibre landing gear, which is equipped with a custom-built bracket to mount the camera. The video transmitter is installed above the hexacopter frame for better signal transmission.

2) A glance at pictures before and after installation

Ground control station

1) MAVProxy

MAVProxy is a MAVLink protocol proxy and ground station. It is a fully functioning GCS for UAVs, oriented towards command-line operation, and it can be used with any UAV that supports the MAVLink protocol, such as the ArduPilotMega (APM). It is a console-based application that runs in a command prompt. It is written in Python and supports loadable modules, which in turn provide consoles, moving maps, joysticks, antenna trackers and an image processing module.

2) Mission Planner

Mission Planner is a fully featured ground control station. It is used to enter waypoints using GPS and to select mission commands from drop-down menus. Mission Planner can also be used to download mission log files for analysis and to configure APM settings for the airframe. Here it is also used to set the tilting angle limits and servo PWM limits for the release mechanism.

3) Setting servo PWM limits and angle limits

The picture below shows a snapshot of mission waypoint entry through GPS using Mission Planner V1.2.55. Figure 3.11 shows the setting of the servo limits and tilting angle limits using Mission Planner. Both parameters are set with RC6 as the servo input channel and RC11 as the output channel on the APM flight controller. The lowest servo limit is set to 1100 µs and the highest to 1900 µs, according to the values shown in radio calibration. The angle limits are set to the range -45 to +45 degrees.
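Using the limits above, the relationship between tilt angle and servo pulse width can be illustrated with a linear mapping. This is a minimal sketch, assuming a linear angle-to-PWM relationship and clamping at the limits; `angle_to_pwm` is a hypothetical helper, and the APM firmware performs its own mapping internally once the limits are set in Mission Planner.

```python
# Limits taken from the Mission Planner settings described above.
PWM_MIN = 1100      # µs, lowest servo limit
PWM_MAX = 1900      # µs, highest servo limit
ANGLE_MIN = -45.0   # degrees
ANGLE_MAX = 45.0    # degrees


def angle_to_pwm(angle_deg: float) -> int:
    """Map a tilt angle to a servo pulse width, clamped to the limits."""
    angle = max(ANGLE_MIN, min(ANGLE_MAX, angle_deg))
    fraction = (angle - ANGLE_MIN) / (ANGLE_MAX - ANGLE_MIN)
    return round(PWM_MIN + fraction * (PWM_MAX - PWM_MIN))


print(angle_to_pwm(0))     # 1500, the centre of the PWM range
print(angle_to_pwm(22.5))  # 1700
print(angle_to_pwm(90))    # 1900, clamped to the upper limit
```

Each degree of tilt corresponds to 800 / 90 ≈ 8.9 µs of pulse width in this model.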

Imaging Tools and software

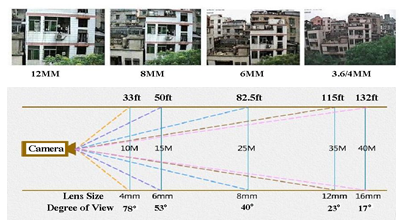

1) The onboard CCD camera is tested to ensure it is fully functional as stated in the camera specifications. It is tested with a 933 MHz video transmitter (TX) and receiver (RX) for wireless communication. The lens size and field of view are to be measured experimentally. The picture below shows the 1/3-inch 420L Sony 12 V CCD camera used for image capture, the video transmitter and receiver used for video signal transmission, and the camera range and angle of view detectable by the CCD camera according to its specifications.
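Once the field of view has been measured, the ground area covered by the camera at a given altitude follows from simple geometry. This is a minimal sketch, assuming a downward-pointing camera over flat ground and an ideal pinhole lens; the function names and the example numbers are illustrative, not measured values for this camera.

```python
import math


def ground_footprint(altitude_m: float, fov_deg: float) -> float:
    """Width of ground (m) seen across a field of view at a given altitude.

    Pinhole model, camera pointing straight down: width = 2 * h * tan(fov / 2).
    """
    if not 0 < fov_deg < 180:
        raise ValueError("field of view must be between 0 and 180 degrees")
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def metres_per_pixel(altitude_m: float, fov_deg: float, pixels: int) -> float:
    """Ground distance covered by one pixel along the same axis."""
    return ground_footprint(altitude_m, fov_deg) / pixels


# Example: a 90-degree horizontal FOV at 10 m covers about 20 m of ground,
# i.e. roughly 2.8 cm per pixel across a 720-pixel-wide PAL frame.
print(ground_footprint(10, 90))
print(metres_per_pixel(10, 90, 720))
```

The footprint grows linearly with altitude, which is why a higher flight captures more surrounding structure while each target occupies fewer pixels.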

2) A capture device is required to convert the analog video output of the wireless video receiver into a digital format that can be used by a video encoder named DV Driver. Such capture devices are available either as internal capture cards or as external devices connected via USB. The EasyCAP DC60, which runs under the STK1160 driver, is used here to capture video at up to 720x576 pixels at 25 fps (PAL) and 720x480 pixels at 30 fps (NTSC). It provides four analog inputs: CVBS, S-Video, and Audio Left and Right.

3) Image processing is done using Python-SimpleCV. SimpleCV is an open-source framework for building computer vision applications that gives easy access to several high-powered computer vision libraries such as OpenCV. Python is a widely used high-level programming language that emphasizes code readability, and its syntax allows programmers to express concepts in fewer lines of code. Python supports multiple programming paradigms, including object-oriented, imperative and functional styles, and it is often used as a scripting language, as in SimpleCV for computer vision applications.

Project Flow Chart

The flowchart above shows the research activities. The images taken by the CCD camera are sent through the video transmitter to the wireless receiver of the ground control station. The image processing algorithm is implemented in a Python Integrated Development Environment (IDE). The imaging payload system is assembled on the UAV platform as part of system integration.

The radio Rx channel and servo payload parameters for the release mechanism are set in Mission Planner V1.2.55. MAVProxy is used as the ground control station to interface with Python-SimpleCV and process real-flight image data. The captured image is processed with the given pseudocode to identify the predefined target. Lastly, a computer overriding signal is sent to the flight controller for the precision approach and to trigger the release mechanism.
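The release part of the overriding signal could be expressed as a MAVLink `COMMAND_LONG` carrying `MAV_CMD_DO_SET_SERVO` (command 183, where param1 is the servo number and param2 the PWM value). This is a minimal sketch that only builds the message fields; the helper name is hypothetical, using servo output 11 (from the RC11 setting above) is an assumption, and the flight-mode override is not covered. With pymavlink, the resulting fields could be passed to `command_long_send` along with the target system and component IDs.

```python
from typing import Dict, Tuple

MAV_CMD_DO_SET_SERVO = 183  # MAVLink command: set a servo output to a PWM value


def build_release_command(servo_channel: int, pwm: int,
                          pwm_min: int = 1100, pwm_max: int = 1900) -> Dict[str, object]:
    """Build COMMAND_LONG fields that drive the release servo.

    Hypothetical helper: the PWM value is checked against the limits set in
    Mission Planner before the command is built.
    """
    if not pwm_min <= pwm <= pwm_max:
        raise ValueError(f"PWM {pwm} outside limits {pwm_min}-{pwm_max}")
    params: Tuple[float, ...] = (float(servo_channel), float(pwm), 0.0, 0.0, 0.0, 0.0, 0.0)
    return {"command": MAV_CMD_DO_SET_SERVO, "confirmation": 0, "params": params}


# Assumed: servo output 11 drives the release mechanism; 1900 µs opens it.
print(build_release_command(11, 1900))
```

Checking the PWM value before sending guards against commanding the servo past the mechanical limits calibrated in Mission Planner.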
